Our attention as an industry has been focused on Artificial Intelligence (AI) and Machine Learning (ML) for a couple of years now. Microsoft has emerged as the clear market leader, bolstered by its partnerships with OpenAI and Nvidia. Thanks to the CUDA SDK, which allows what was primarily gaming hardware to be used as a massively parallel computing platform, Nvidia has been the undisputed leader in AI hardware. The competition in this market space (AMD and Intel) has struggled to gain traction with their offerings. Similarly, OpenAI has emerged as the undisputed leader in Large Language Models (LLMs) and Generative AI with its GPT-3/GPT-4 foundation models; the competition (Meta, Google, and Mistral) still struggles to match the capabilities of the previous generation of models from OpenAI. Microsoft also has Azure cloud offerings that are best in class, including Azure Machine Learning, Azure Cognitive Services, Azure Cognitive Search, Azure AI Studio, and Azure OpenAI.

With this knowledge, I was surprised to see Microsoft diversifying its hardware and software positions in the marketplace, with numerous announcements involving competitors of both OpenAI and Nvidia. This was especially surprising on the model side, considering Microsoft’s ownership stake in OpenAI. Reflecting on these moves, though, I believe they make a lot of sense, since both OpenAI and Nvidia have shown weaknesses that put Microsoft’s market-leading position at risk. Nvidia has struggled to deliver enough GPUs to meet the demand for training and inference tasks in the AI space. Anyone who has tried to get GPU compute in Azure can attest to how much higher the demand is relative to the available supply. This isn’t for lack of trying; Microsoft currently opens around 100 data centers annually worldwide. However, Nvidia’s ability to supply GPUs has constrained the AI compute available in these data centers.

OpenAI has similarly shown weakness recently. One of its founding principles was that the board of directors would act as a check and balance on the commercialization of AI. The reasoning was simple: they felt that AI development driven solely by profit and commercialization would pose a global danger. At the time, this seemed like the concern of someone who watched too many sci-fi movies, but as each new generation of AI models is created, this concern appears more and more valid. As many of you saw a few months ago, the board of directors felt the need to act and fired Sam Altman, the CEO and visionary of OpenAI, only to have the decision reversed a few days later, with numerous board members being replaced in the process. The details of what happened were never publicly shared, but this raised concerns about the fragility of OpenAI as an organization.

Microsoft has made two significant moves in the hardware space. First, they have announced a partnership with AMD to use and deploy their GPUs. This means that their offerings will need to add support for ROCm or a CUDA compatibility layer, but it enables Microsoft to purchase more off-the-shelf GPU compute from the second-largest supplier. The more exciting move is that Microsoft has begun creating its own hardware, the Maia 100 chip. The surprise in the Maia announcement was that significant amounts of inference were already taking place on these chips in Azure data centers. What sets this chip apart from the AMD and Nvidia offerings is that it is not a gaming GPU with the ability to run scientific applications; it is compute designed specifically for AI operations. I suspect this will allow the chip to run cooler and more efficiently than AMD and Nvidia offerings, although numbers have not been released. This means that Microsoft Azure currently has three sources of AI compute: Nvidia, AMD, and Microsoft itself. This puts them at a distinct advantage in acquiring AI compute, which they need since they currently handle most of the world’s AI workloads.

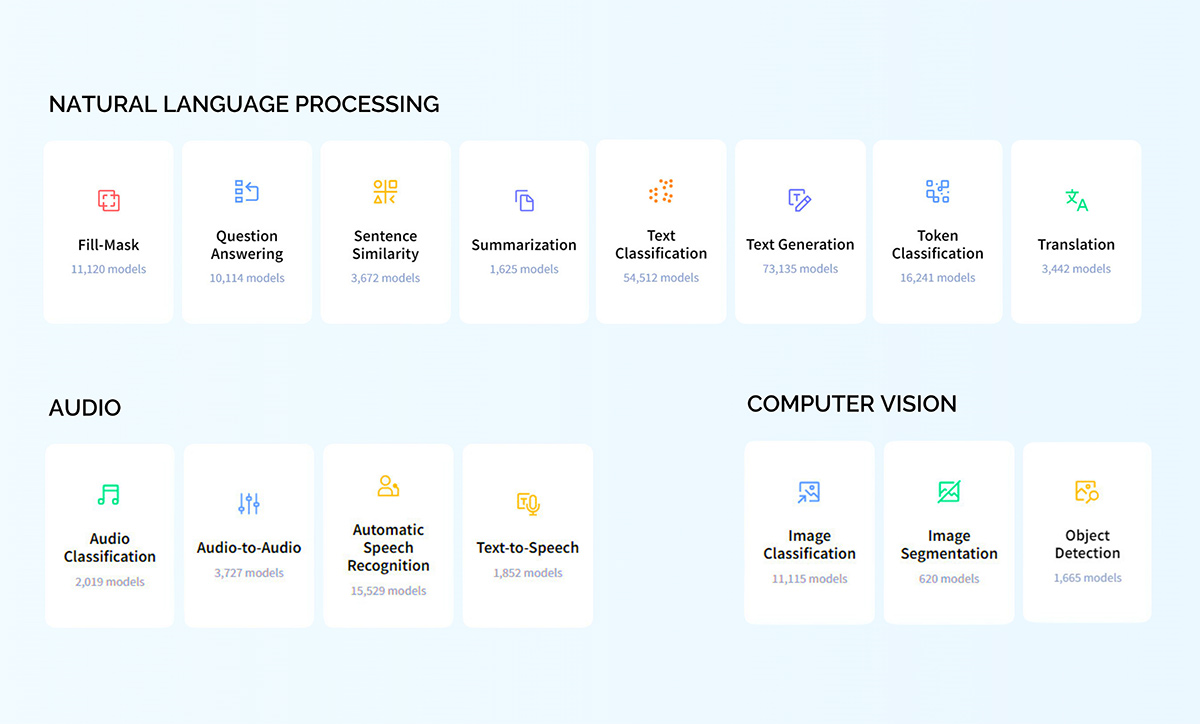

In the software space, Microsoft’s strength is its access to OpenAI models, which makes the same great models available in Azure with the addition of enterprise-level security features. Microsoft has also established a partnership with Hugging Face, which has resulted in the “Hugging Face on Azure” offering. I’ve come to think of Hugging Face as GitHub for ML models, bringing open-source sensibilities to the world of AI/ML. Their Azure offering takes this to the next level by allowing for a one-click deployment of tens of thousands of models from Hugging Face in a production-ready manner. This suddenly makes Azure the home of not only the best models in existence through OpenAI but also a variety of models from Hugging Face that go well beyond LLMs, targeting specific use cases, accuracy levels, costs, and reliability requirements.

Microsoft has also established a partnership with Mistral AI, a Paris-based AI startup that is a direct competitor of OpenAI. Mistral is unique among OpenAI’s competitors in that its models are catching up very rapidly. Microsoft’s investment will give Mistral the funding it needs to catch up and build a premier foundation model that can compete with GPT-4. This partnership has made Mistral’s closed-source models available on Azure, which means Azure now hosts both of the most exciting closed-source LLMs. These Azure versions are suitable for enterprise usage and diversify the off-the-shelf models available in Azure.

Microsoft has also hired Mustafa Suleyman to head its consumer artificial intelligence business. Suleyman co-founded the AI research lab DeepMind and is an influential figure in the AI space. This is a similar move to what Microsoft has done in the hardware space. They partnered with the best (OpenAI/Nvidia), partnered with an exciting up-and-comer (Mistral/AMD), and are working to build their own internal competency (Maia/Suleyman). These moves demonstrate that Microsoft is focused on maintaining its leadership position in the AI space and is hunkering down for the long term to have the best models and the best platform to train and perform inference.

It might seem that OpenAI should be nervous as Microsoft diversifies its strategy, but Microsoft is heavily investing in the next generation of OpenAI. It’s common knowledge that Microsoft provided the compute for OpenAI to train their models as part of Azure. What most people don’t realize is that, in doing so, they accidentally created a supercomputer that briefly held the #3 spot in the world. Microsoft and OpenAI are doing it again. This time, they are designing the “Stargate” AI data center, a $100 billion project that will be prepared to train future generations of OpenAI models. They are currently targeting 2028 for this data center to come online. It’s hard even to imagine what the next generation of models that could come out of that data center will look like. However, those models will likely be industry-leading, and the OpenAI and Microsoft partnership is still the one to watch and the closest to achieving AGI.

There are so many exciting innovations coming in AI/ML, but so much is already established and ready for use in the enterprise. Does your company need help figuring out how to navigate the AI/ML landscape? New Resources Consulting possesses the depth of knowledge and industry experience to guide you through the complexities of AI and ML. We are committed to partnering with you to uncover the most effective solutions tailored to your enterprise’s unique needs.

Embrace the future of AI with confidence. Reach out to New Resources Consulting today, and let’s embark on a journey to harness the transformative power of AI/ML for your business.